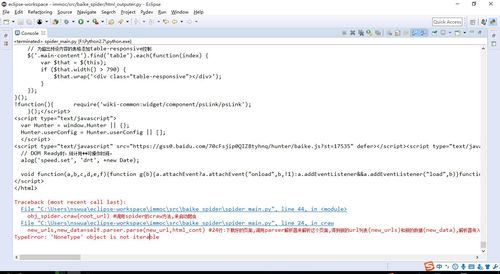

TypeError: 'NoneType' object is not iterable. How do I fix this?

    #coding:utf-8
    from baike_spider import url_manager, html_downloader, html_parser,\
        html_outputer

    class SpiderMain(object):
        def __init__(self):    # initialize the modules that the other methods need
            self.urls = url_manager.UrlManager()  # URL manager
            self.downloader = html_downloader.HtmlDownloader() # HTML downloader
            self.parser = html_parser.HtmlParser()  # HTML parser
            self.outputer = html_outputer.HtmlOutputer()  # outputer

        # the crawler's scheduling routine
        def craw(self, root_url):
            count = 1
            self.urls.add_new_url(root_url)  # add the entry URL to the manager, so it now holds a URL waiting to be crawled
            while self.urls.has_new_url():  # loop while the URL manager still has URLs to crawl, i.e. while has_new_url() is True
                #try:
                new_url = self.urls.get_new_url() # the manager has pending URLs; take one of them with get_new_url
                print 'craw %d : %s' % (count, new_url)  # print which URL number is being crawled
                html_cont = self.downloader.download(new_url) # download the page for this URL; the result is stored in html_cont
                new_urls, new_data = self.parser.parse(new_url, html_cont) # line 24: parse the downloaded page to get the new URL list (new_urls) and the new data (new_data); the parser takes the current URL (new_url) and the downloaded page content (html_cont)
                self.urls.add_new_urls(new_urls)  # add the newly parsed URLs (new_urls) to the URL manager
                self.outputer.collect_data(new_data) # collect the data (new_data)

                if count == 1000:
                    break
                count += 1

                #except:           # handle exceptions (e.g. a URL that cannot be accessed)
                    #print 'craw failed'

            self.outputer.output_html()


    # first, write the main function
    if __name__ == '__main__':
        root_url = 'http://baike.baidu.com/item/Python'  # set the entry URL
        obj_spider = SpiderMain()   # create an instance; SpiderMain is the class
        obj_spider.craw(root_url) # call the spider's craw method to start the crawler

    #coding:utf-8
    # each module defines a class that the main program needs
    class UrlManager(object):
        def __init__(self):
            self.new_urls = set()  # new and already-crawled URLs are both stored in set()s
            self.old_urls = set()

        # add a single new URL to the manager
        def add_new_url(self, url):  # adds one pending URL to self.new_urls
            if url is None:
                return  # nothing to add
            if url not in self.new_urls and url not in self.old_urls:
                self.new_urls.add(url)

        # add a batch of new URLs to the manager
        def add_new_urls(self, urls):   # urls is the list of new pending URLs produced by the page parser
            if urls is None or len(urls) == 0:  # if urls is None or empty
                return  # nothing to add
            for url in urls:
                self.add_new_url(url)   # note this call

        # check whether the manager has any URL waiting to be crawled
        def has_new_url(self):        # used by the while condition in spider_main
            return len(self.new_urls) != 0   # a non-zero length means there are pending URLs

        # get one new pending URL from the URL manager
        def get_new_url(self):
            new_url = self.new_urls.pop()  # take one URL out of the set and remove it
            self.old_urls.add(new_url)
            return new_url  # returned to the get_new_url call in spider_main

    #coding:utf-8
    import urllib2
    # each module defines a class that the main program needs
    class HtmlDownloader(object):

        def download(self, url):
            if url is None:
                return None
            response = urllib2.urlopen(url)  # Baidu Baike is crawled with the simplest urllib2 code, with no cookie or proxy handling
            if response.getcode() != 200:
                return None
            print response.read()

    #coding:utf-8
    from bs4 import BeautifulSoup
    import re
    import urlparse

    # each module defines a class that the main program needs
    class HtmlParser(object):

        def _get_new_urls(self, page_url, soup):   # what is page_url? It is the new_url argument passed in by the main program spider_main.
            new_urls = set()
            # /item/123
            links = soup.find_all('a', href=re.compile(r'/item/'))  # compile(r"/item/\d+\\"))  # in '\\', the first \ cancels the escaping function of the second \
            for link in links:
                new_url = link['href']
                new_full_url = urlparse.urljoin(page_url, new_url) # resolve new_url against page_url to build a complete URL
                new_urls.add(new_full_url)
            return new_urls

        def _get_new_data(self, page_url, soup):
            res_data = {}

            # url
            res_data['url'] = page_url

            # <dd class="lemmaWgt-lemmaTitle-title">  <h1>Python</h1>
            title_node = soup.find('dd', class_="lemmaWgt-lemmaTitle-title").find('h1')
            res_data['title'] = title_node.get_text()  # originally mistyped as get_txt()
            # <div class="lemma-summary" label-module="lemmaSummary">
            summary_node = soup.find('div', class_="lemma-summary")
            res_data['summary'] = summary_node.get_text()

            return res_data

        def parse(self, page_url, html_cont):   # (page_url, html_cont) are the arguments (new_url, html_cont) passed in on line 24 of spider_main
            if page_url is None or html_cont is None:
                return
            soup = BeautifulSoup(html_cont, 'html.parser', from_encoding='utf-8')
            new_urls = self._get_new_urls(page_url, soup)  # local method that uses find_all to collect every related page URL, completes each URL, and returns them in new_urls for spider_main to use
            new_data = self._get_new_data(page_url, soup)  # local method self._get_new_data
            return new_urls, new_data

    #coding:utf-8

    # each module defines a class that the main program needs
    class HtmlOutputer(object):
        def __init__(self):
            self.datas = []

        def collect_data(self, data):
            if data is None:
                return
            self.datas.append(data)

        def output_html(self):  # spider_main calls this without arguments; it writes everything gathered by collect_data
            fout = open('output.html', 'w')

            fout.write("<html>")
            fout.write("<body>")
            fout.write("<table>")

            # ascii
            for data in self.datas:
                fout.write("<tr>")
                fout.write("<td>%s</td>" % data['url'])
                fout.write("<td>%s</td>" % data['title'].encode('utf-8'))
                fout.write("<td>%s</td>" % data['summary'].encode('utf-8'))
                fout.write("</tr>")

            fout.write("</table>")
            fout.write("</body>")
            fout.write("</html>")

            fout.close()

I changed a few places based on the code shared in the comments, but the error still occurs. I can't tell what is wrong; the code is above.

2018-01-04

File: html_downloader.py

Line 15: change print response.read() to return response.read()
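Why that one-word change matters can be shown with a small reproduction. A function that only prints its result returns None, so download() hands None to parse(), parse() hits its bare return and also yields None, and the two-name unpacking on line 24 of spider_main then raises the TypeError. The sketch below is only an illustration under stated assumptions: the function names (download_with_print, download_with_return, crawl_once) and the stub HTML string are hypothetical stand-ins for the posted classes, no network access is involved, and it is written so that it also runs on Python 3.

```python
# Stand-ins for HtmlDownloader.download and HtmlParser.parse (hypothetical names).
# No real page is fetched; the HTML string is a stub.

def download_with_print(url):
    """Mimics the posted download(): the page is printed, nothing is returned."""
    html = "<html>stub page for %s</html>" % url
    print(html)  # the function ends here, so the caller receives None


def download_with_return(url):
    """Mimics the corrected download(): the page is handed back to the caller."""
    html = "<html>stub page for %s</html>" % url
    return html


def parse(page_url, html_cont):
    """Uses the same guard as the posted parse(): a bare return yields None."""
    if page_url is None or html_cont is None:
        return
    return set(), {"url": page_url}


def crawl_once(download, url):
    """Runs one iteration of the crawl loop; returns None if unpacking fails."""
    html_cont = download(url)
    try:
        # Same shape as line 24 of spider_main: unpacking None raises TypeError.
        new_urls, new_data = parse(url, html_cont)
    except TypeError:
        return None
    return new_urls, new_data
```

Calling crawl_once(download_with_print, url) returns None because the unpacking fails, while crawl_once(download_with_return, url) returns the (new_urls, new_data) pair. A further option, not part of the original answer, would be for parse() to return an explicit empty pair instead of a bare return, so that a failed download is skipped rather than crashing the loop.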